In today’s digital landscape, efficient data management lies at the core of successful Java applications. But why settle for basic when you can embrace advanced techniques to truly optimize your data handling? In this guide, we’ll dive into the importance of efficient data management in Java applications and why advanced techniques are essential.

From optimizing data structures to mastering concurrent data access, we’ll tackle the challenges head-on. Say goodbye to inefficient memory allocation and hello to streamlined serialization and deserialization. We’ll explore how leveraging Java libraries and frameworks can elevate your data management game, making tasks like database access and big data processing a breeze.

Join us as we uncover the complexities of advanced Java programming techniques, empowering you to take control of your data and unlock the full potential of your applications. Let’s dive in and discover the keys to efficient data management in the Java ecosystem.

Understanding Data Structures and Algorithms in Java

Understanding data structures and algorithms is fundamental to efficient data management in Java applications. Java provides a plethora of data structures, including arrays, lists, maps, sets, and trees, each serving distinct purposes. Arrays, for instance, offer quick access to elements by index, ideal for scenarios requiring random access. Lists, such as ArrayList and LinkedList, grow dynamically: ArrayList offers constant-time access by index but must shift elements when inserting or deleting in the middle, while LinkedList offers constant-time insertion and deletion at either end but has to walk the list to reach an arbitrary position.

Choosing the right data structure is critical for optimizing performance. For instance, when dealing with key-value pairs, HashMap offers average constant-time complexity for basic operations like insertion, deletion, and retrieval. However, if ordered traversal is needed, TreeMap keeps key-value pairs sorted by key at the cost of logarithmic-time operations.
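As a rough illustration of that trade-off, the sketch below fills a HashMap and then copies it into a TreeMap. The class name MapChoiceDemo and the sample stock data are made up for this example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical demo class; the names and data are illustrative only.
final class MapChoiceDemo {

    // HashMap: average O(1) put/get, but no guaranteed iteration order.
    static Map<String, Integer> unorderedStock() {
        Map<String, Integer> stock = new HashMap<>();
        stock.put("pear", 12);
        stock.put("apple", 30);
        stock.put("mango", 7);
        return stock;
    }

    // TreeMap: O(log n) put/get, keys always iterate in sorted order.
    static TreeMap<String, Integer> sortedStock() {
        return new TreeMap<>(unorderedStock());
    }

    public static void main(String[] args) {
        System.out.println(unorderedStock().get("apple")); // lookup by key
        System.out.println(sortedStock().keySet());        // keys in natural order
        System.out.println(sortedStock().firstKey());      // smallest key
    }
}
```

If only lookups by key matter, the HashMap is usually the simpler choice; the TreeMap earns its extra cost when the code needs sorted iteration or calls such as firstKey().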

Moreover, understanding common algorithms for data manipulation and retrieval is essential. For instance, searching algorithms like binary search are efficient for sorted arrays, offering logarithmic time complexity. Sorting algorithms like quicksort and mergesort play a crucial role in organizing data efficiently, with variations suited for different use cases and data distributions.
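To make the binary search idea concrete, here is a minimal hand-written version next to the JDK's own Arrays.binarySearch. The class BinarySearchDemo and its indexOf method are names invented for this sketch.

```java
import java.util.Arrays;

// Hypothetical demo class showing binary search on a sorted int array.
final class BinarySearchDemo {

    // Returns the index of target in a sorted array, or -1 if it is absent.
    static int indexOf(int[] sorted, int target) {
        int low = 0;
        int high = sorted.length - 1;
        while (low <= high) {
            int mid = (low + high) >>> 1; // unsigned shift avoids int overflow
            if (sorted[mid] < target) {
                low = mid + 1;            // target can only be in the upper half
            } else if (sorted[mid] > target) {
                high = mid - 1;           // target can only be in the lower half
            } else {
                return mid;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] data = {42, 7, 19, 3, 25};
        Arrays.sort(data);                                  // binary search needs sorted input
        System.out.println(indexOf(data, 19));              // hand-written search
        System.out.println(Arrays.binarySearch(data, 19));  // built-in equivalent
    }
}
```

In everyday code the built-in Arrays.binarySearch or Collections.binarySearch is normally preferable; the manual loop is shown only to expose how each step halves the search range.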

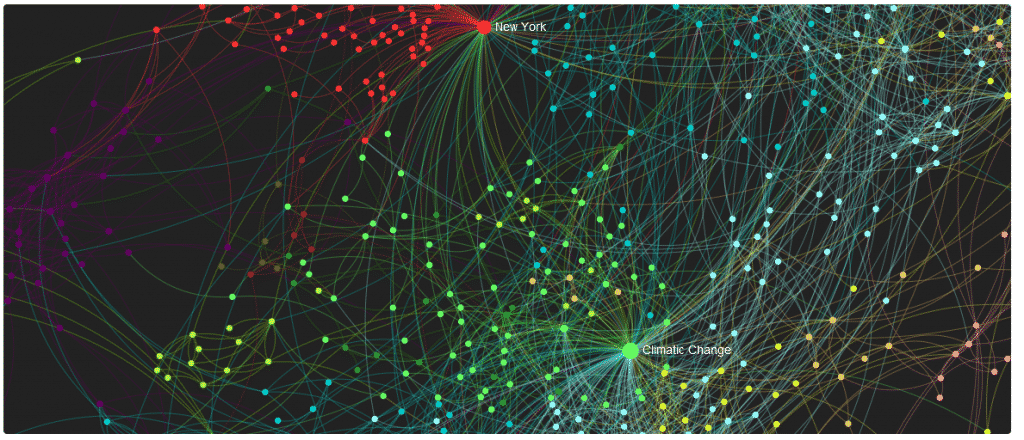

Additionally, mastering data structures and algorithms involves knowing when and how to apply them. For instance, graph algorithms like breadth-first search and depth-first search are indispensable for analyzing complex relationships between data points, such as in social networks or routing algorithms.
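A breadth-first search over a small "who follows whom" graph might look like the sketch below. The graph contents, the class BfsDemo, and the adjacency-list representation are all assumptions chosen for illustration, and List.of and Map.of require Java 9 or later.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Hypothetical demo class: BFS over an adjacency-list graph.
final class BfsDemo {

    // Made-up sample graph: each key maps to its directly connected nodes.
    static Map<String, List<String>> sampleGraph() {
        return Map.of(
                "ann", List.of("bob", "cara"),
                "bob", List.of("dan"),
                "cara", List.of("dan"),
                "dan", List.of());
    }

    // Visits nodes level by level, starting from start, and returns the visit order.
    static List<String> bfs(Map<String, List<String>> graph, String start) {
        List<String> order = new ArrayList<>();
        Set<String> visited = new HashSet<>();
        Queue<String> queue = new ArrayDeque<>();
        visited.add(start);
        queue.add(start);
        while (!queue.isEmpty()) {
            String node = queue.remove();
            order.add(node);
            for (String next : graph.getOrDefault(node, List.of())) {
                if (visited.add(next)) { // add() returns false if already seen
                    queue.add(next);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(bfs(sampleGraph(), "ann"));
    }
}
```

Swapping the queue for a stack (or using recursion) turns the same skeleton into a depth-first search.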

Advanced Data Management Techniques

Optimizing Data Structures

Advanced data management in Java involves optimizing data structures to suit specific use cases, thereby enhancing performance and efficiency. One technique is custom implementations of collections tailored to unique requirements. For instance, if frequent insertion and deletion operations are common, a custom linked list implementation tailored to that access pattern might outperform the standard ArrayList or LinkedList, although any such gain should be confirmed by benchmarking.

Additionally, optimizing data structures involves considering memory usage and access patterns. For example, using primitive data types instead of wrapper classes reduces memory overhead. Furthermore, minimizing the number of objects created can improve performance by reducing garbage collection overhead.
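As one possible illustration of the primitive-versus-wrapper point, the sketch below stores values in a plain int[] instead of an ArrayList<Integer>, so no Integer objects are allocated. IntList is a hypothetical class written for this article, not a JDK or library type.

```java
import java.util.Arrays;

// Hypothetical growable list of primitive ints (no boxing, one backing array).
final class IntList {
    private int[] values = new int[8];
    private int size;

    // Appends a value, doubling the backing array when it is full.
    void add(int value) {
        if (size == values.length) {
            values = Arrays.copyOf(values, size * 2);
        }
        values[size++] = value;
    }

    // Returns the element at index, with an explicit bounds check.
    int get(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index " + index + ", size " + size);
        }
        return values[index];
    }

    int size() {
        return size;
    }

    // Sums the stored values without creating any wrapper objects.
    long sum() {
        long total = 0;
        for (int i = 0; i < size; i++) {
            total += values[i];
        }
        return total;
    }
}
```

A caller would use it much like a list, for example `IntList ids = new IntList(); ids.add(7);`. Whether the saving matters depends on how many elements are held and how hot the code path is.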

Moreover, leveraging data structure libraries such as Trove or Fastutil can provide specialized implementations optimized for specific use cases. These libraries offer alternatives to standard Java collections, providing better performance and memory utilization.

Memory Management

Efficient memory management is crucial for Java applications to optimize performance and resource utilization. Strategies for efficient memory allocation and deallocation involve careful consideration of object lifecycle and memory usage patterns. For example, using object pooling techniques can reduce the overhead of object creation and destruction by reusing objects instead.
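The object pooling idea can be sketched roughly as follows. SimplePool, its maxIdle limit, and the method names acquire and release are assumptions made for this example; a production pool would typically also reset object state and handle validation.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Supplier;

// Hypothetical minimal object pool; thread safety comes from synchronized methods.
final class SimplePool<T> {
    private final Deque<T> idle = new ArrayDeque<>();
    private final Supplier<T> factory;
    private final int maxIdle;

    SimplePool(Supplier<T> factory, int maxIdle) {
        this.factory = factory;
        this.maxIdle = maxIdle;
    }

    // Hands out an idle object if one exists, otherwise creates a new one.
    synchronized T acquire() {
        T pooled = idle.pollFirst();
        return pooled != null ? pooled : factory.get();
    }

    // Returns an object to the pool; extras beyond maxIdle are simply dropped.
    synchronized void release(T object) {
        if (idle.size() < maxIdle) {
            idle.addFirst(object);
        }
    }
}
```

A caller might write `SimplePool<StringBuilder> pool = new SimplePool<>(StringBuilder::new, 4);` and wrap each use in acquire and release. Pooling tends to pay off mainly for objects that are expensive to create, such as connections or large buffers; for small short-lived objects the JVM's allocator is often faster than a pool.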

Garbage collection, while essential for automatic memory management in Java, can impact performance if not managed properly. Understanding the available collectors helps developers match garbage collection behavior to their application’s needs: G1 has been the default collector on most systems since JDK 9, the Parallel collector favors throughput, and low-pause collectors such as ZGC target latency-sensitive workloads. Tuning parameters such as heap size (for example -Xms and -Xmx) and pause-time goals (for example -XX:MaxGCPauseMillis with G1) can significantly improve overall application performance, provided each change is measured.

Furthermore, minimizing object retention and avoiding leaks through proper resource management is essential. Weak references let caches and listeners hold objects without preventing their collection, and try-with-resources closes files, sockets, and similar resources automatically. Together these techniques help prevent leaks and improve overall application stability.
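Here is a small sketch of try-with-resources in action. The class ResourceDemo and the countLines helper are invented for this example, and an in-memory StringReader stands in for a real file so the code runs anywhere.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Hypothetical demo class: the reader is closed automatically, even on errors.
final class ResourceDemo {

    // Counts the lines in text; the try-with-resources block closes the reader.
    static int countLines(String text) {
        try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
            int lines = 0;
            while (reader.readLine() != null) {
                lines++;
            }
            return lines;
        } catch (IOException e) {
            // Rethrown unchecked to keep the sketch's signature simple.
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(countLines("first\nsecond\nthird"));
    }
}
```

Any class that implements AutoCloseable can be used in the same way, which removes the need for a hand-written finally block.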

Concurrent Data Access

Concurrent data access poses challenges in Java applications, requiring careful handling to avoid race conditions and data inconsistencies. Understanding concurrency issues is essential, including techniques such as synchronization, locks, and concurrent collections.

Synchronization ensures that only one thread can access a critical section of code at a time, preventing data corruption. Locks provide finer-grained control over concurrency by allowing threads to acquire and release locks on specific resources as needed.
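A minimal sketch of lock-based protection, assuming a simple shared counter, is shown below. LockedCounter and runDemo are hypothetical names; the same effect could be had with a synchronized method or an AtomicLong.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical counter guarded by an explicit lock.
final class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private long count;

    // The lock is always released in finally, even if the body throws.
    void increment() {
        lock.lock();
        try {
            count++;
        } finally {
            lock.unlock();
        }
    }

    long get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Starts several threads that increment one shared counter, then returns the total.
    static long runDemo(int threads, int incrementsPerThread) {
        LockedCounter counter = new LockedCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return counter.get();
    }
}
```

Without the lock, the unsynchronized count++ could lose updates when threads interleave; with it, runDemo(4, 10_000) returns exactly 40000.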

Java’s concurrency utilities in the java.util.concurrent package offer powerful abstractions for managing concurrent access. Classes like ConcurrentHashMap and CopyOnWriteArrayList provide thread-safe alternatives to HashMap and ArrayList, ensuring safe access in multi-threaded environments.
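For example, ConcurrentHashMap's atomic merge method can count words from several threads without any explicit locking. The WordCountDemo class and its input are made up for this sketch, and the parallel stream is just one convenient way to get multiple threads.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo class: concurrent word counting with atomic updates.
final class WordCountDemo {

    // merge() performs the read-modify-write for each key atomically.
    static Map<String, Integer> count(List<String> words) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        words.parallelStream().forEach(word -> counts.merge(word, 1, Integer::sum));
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(count(List.of("a", "b", "a", "c", "a")));
    }
}
```

The same loop over a plain HashMap would be unsafe, because concurrent puts can corrupt the map or lose increments.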

Best practices for concurrent data access include minimizing lock contention, using immutable objects where possible, and employing thread-safe data structures. Additionally, understanding thread synchronization and using appropriate synchronization mechanisms can help avoid deadlocks and performance bottlenecks.

Serialization and Deserialization

Serialization and deserialization are essential processes in Java for converting objects into byte streams and vice versa, enabling data transfer and persistence.

Serialization allows objects to be converted into a byte stream, making them suitable for transmission over networks or storage in files. Java provides built-in support for serialization through the Serializable marker interface: any class that implements it can be written with ObjectOutputStream and read back with ObjectInputStream without further code. However, for complex data structures or custom serialization requirements, developers can implement custom serialization logic using the Externalizable interface or custom serialization methods.

Efficient serialization involves minimizing the size of the serialized data and optimizing serialization/deserialization performance. Techniques such as marking non-essential fields with the transient keyword, choosing an appropriate serialization format like JSON or Protocol Buffers (both of which rely on third-party libraries), and employing compression can help reduce the size of serialized data.

Similarly, efficient deserialization involves minimizing the overhead of reconstructing objects from byte streams. Techniques such as lazy loading and caching can help improve deserialization performance, especially for large or complex object graphs.
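The following sketch round-trips an object through Java's built-in serialization and shows a transient field being left out. The User class, its fields, and the roundTrip helper are hypothetical and exist only for this example.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical demo class: serialize to bytes in memory, then read the object back.
final class SerializationDemo {

    static final class User implements Serializable {
        private static final long serialVersionUID = 1L;
        final String name;
        final transient String sessionToken; // excluded from the byte stream

        User(String name, String sessionToken) {
            this.name = name;
            this.sessionToken = sessionToken;
        }
    }

    // Writes the user to a byte array and deserializes a copy from it.
    static User roundTrip(User user) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(user);
            }
            try (ObjectInputStream in =
                         new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (User) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("serialization round trip failed", e);
        }
    }

    public static void main(String[] args) {
        User copy = roundTrip(new User("ann", "secret-token"));
        System.out.println(copy.name);         // restored from the stream
        System.out.println(copy.sessionToken); // null: transient fields are skipped
    }
}
```

Note that built-in deserialization of untrusted input is a known security risk, which is one more reason formats such as JSON or Protocol Buffers are often preferred at system boundaries.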

Data Compression Techniques

Data compression techniques play a vital role in efficient data management in Java applications, enabling more efficient storage and transmission of data. Java offers various compression algorithms that developers can leverage to optimize their data management strategies.

One of the commonly used compression algorithms available in Java is the Deflate algorithm, which is utilized in popular compression formats like ZIP and GZIP. This algorithm efficiently compresses data by identifying repeated patterns and replacing them with shorter representations.

Additionally, other compression algorithms such as LZ4, Snappy, and Brotli are available to Java applications through third-party libraries rather than the JDK itself, each with its own advantages and use cases. LZ4, for instance, is known for its high-speed compression and decompression, making it suitable for applications requiring real-time data processing.

Implementing data compression in Java involves selecting the appropriate compression algorithm based on factors like data type, compression ratio, and performance requirements. Developers can use libraries like Apache Commons Compress or Java’s built-in java.util.zip package to implement compression and decompression functionalities seamlessly.
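As a minimal sketch using only the built-in java.util.zip package, the code below compresses a byte array with GZIP and restores it. The GzipDemo class and its method names are invented for illustration, and readAllBytes requires Java 9 or later.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical demo class: in-memory GZIP compression and decompression.
final class GzipDemo {

    // Compresses input; closing the GZIP stream writes the trailer into buffer.
    static byte[] compress(byte[] input) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Restores the original bytes from GZIP-compressed data.
    static byte[] decompress(byte[] compressed) {
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            return gzip.readAllBytes();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] original = "repeat ".repeat(500).getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(original);
        System.out.println(original.length + " bytes -> " + packed.length + " bytes");
    }
}
```

Highly repetitive input like this shrinks dramatically, while already-compressed or random data may not shrink at all, so it is worth measuring on representative payloads.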

Leveraging Java Libraries and Frameworks

By leveraging Java libraries and frameworks, developers can expedite development processes, reduce boilerplate code, and optimize data management tasks.

For database access, the Java ecosystem offers several popular libraries and frameworks. JDBC (Java Database Connectivity) is the standard API for connecting Java applications to relational databases, enabling developers to execute SQL queries and interact with database systems seamlessly. For higher-level ORM (Object-Relational Mapping) capabilities, JPA (Java Persistence API, now Jakarta Persistence) defines a standard for mapping Java objects to database tables, and frameworks like Hibernate implement it, simplifying data access and manipulation.

In the domain of big data processing, Java developers can utilize the power of frameworks such as Apache Hadoop and Apache Spark. Hadoop provides distributed storage and processing capabilities, allowing applications to handle massive datasets across clusters of commodity hardware. Spark, on the other hand, offers in-memory processing and a more versatile computing model, making it suitable for real-time and iterative processing tasks.

Caching is another critical aspect of efficient data management, and the Java ecosystem offers a variety of options for implementing caching solutions. Ehcache is a widely used caching library that provides in-memory caching with support for distributed caching and caching strategies like LRU (Least Recently Used) and TTL (Time-To-Live). Redis is another popular caching solution: it is an external in-memory data store that Java applications reach through client libraries such as Jedis or Lettuce. It offers advanced data structures and features like persistence and pub/sub messaging, making it suitable for a wide range of caching use cases.
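To show what an LRU strategy does, independent of any particular caching product, here is a small sketch built on the JDK's LinkedHashMap. This is not how Ehcache or Redis is used; LruCache is a hypothetical class that only illustrates the eviction policy.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical LRU cache: evicts the least recently accessed entry when full.
// Not thread-safe; wrap or synchronize it before sharing between threads.
final class LruCache<K, V> extends LinkedHashMap<K, V> {
    private static final long serialVersionUID = 1L;
    private final int capacity;

    LruCache(int capacity) {
        super(16, 0.75f, true); // true = order entries by access, not insertion
        this.capacity = capacity;
    }

    // Called by LinkedHashMap after each insertion; true removes the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

With a capacity of 2, putting "a" and "b", reading "a", and then putting "c" evicts "b", because "a" was touched more recently. Dedicated caching libraries add features this sketch lacks, such as expiry, statistics, and thread safety.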

Performance Optimization Strategies

Profiling and Benchmarking

Profiling involves analyzing the runtime behavior of an application to identify performance bottlenecks and areas for optimization. By profiling an application, developers can pinpoint specific methods or components that contribute most to the overall execution time, memory usage, or CPU utilization.

Several tools are available for profiling Java applications, such as the open-source VisualVM and the commercial profilers YourKit and JProfiler. The JDK itself also ships JDK Flight Recorder for low-overhead profiling. These tools offer features like CPU profiling, memory profiling, and thread analysis, allowing developers to gain insights into the runtime behavior of their applications.

Benchmarking, on the other hand, involves measuring the performance of an application or specific code snippets under controlled conditions. By benchmarking different implementations or optimization techniques, developers can compare their performance and make informed decisions about which approach to adopt.

Tools like JMH (Java Microbenchmark Harness) provide a framework for writing and running microbenchmarks in Java. JMH ensures reliable and accurate benchmarking results by handling warm-up iterations, measuring execution times, and performing statistical analysis.

Performance Tuning Techniques

Performance tuning is a critical aspect of optimizing Java applications for efficiency and speed. One strategy involves optimizing code performance by employing techniques such as loop unrolling and reducing object creation.

Loop unrolling aims to minimize loop overhead by manually expanding loop iterations, thereby reducing the number of loop control instructions executed. The JIT compiler already unrolls many hot loops automatically, so manual unrolling is best reserved for tight loops where benchmarking shows a measurable gain.
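For reference, a manually unrolled summation could look like the sketch below, shown beside the plain loop it replaces. UnrollDemo and its method names are made up for this example, and the unroll factor of four is an arbitrary choice.

```java
// Hypothetical demo class: a plain loop and a four-way unrolled equivalent.
final class UnrollDemo {

    // Straightforward version: one addition and one loop check per element.
    static long sum(int[] data) {
        long total = 0;
        for (int value : data) {
            total += value;
        }
        return total;
    }

    // Unrolled version: four additions per loop check, plus a cleanup loop.
    static long sumUnrolled(int[] data) {
        long total = 0;
        int i = 0;
        int limit = data.length - 3;
        for (; i < limit; i += 4) {
            // The cast makes the whole expression use long arithmetic.
            total += (long) data[i] + data[i + 1] + data[i + 2] + data[i + 3];
        }
        for (; i < data.length; i++) { // handles the 0 to 3 leftover elements
            total += data[i];
        }
        return total;
    }
}
```

Both methods return the same result; only a benchmark on the target JVM can tell whether the unrolled form is actually faster, and it is clearly harder to read.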

Reducing object creation involves minimizing the creation of unnecessary objects, which can lead to excessive garbage collection overhead. Instead of creating new objects repeatedly, developers can reuse existing objects or employ object pooling techniques to mitigate the overhead associated with object creation and destruction.

Additionally, leveraging JIT (Just-In-Time) compiler optimizations can significantly improve runtime performance. The JIT compiler dynamically analyzes and optimizes Java bytecode at runtime, identifying hotspots in the code and applying optimizations to improve execution speed.

Real-World Applications and Case Studies

Real-world applications of advanced Java techniques demonstrate their effectiveness in addressing complex data management challenges.

For instance, consider a large e-commerce platform that handles millions of transactions daily. By implementing efficient data management techniques such as database optimization using Hibernate, caching with Ehcache, and concurrent data access using Java’s concurrency utilities, the platform can ensure fast and reliable performance even under heavy loads. Case studies reveal significant improvements in response times and scalability, resulting in enhanced user experience and increased customer satisfaction.

In the banking sector, advanced Java techniques play a crucial role in ensuring secure and efficient data management. By utilizing encryption algorithms and secure communication protocols, banks can protect sensitive customer data while facilitating seamless transactions. Case studies demonstrate how Java’s security features, combined with effective data compression techniques, enable banks to optimize storage and transmission of financial data, improving operational efficiency and regulatory compliance.

Moreover, in the healthcare industry, Java applications are used for managing electronic health records (EHRs) and medical imaging data. By employing advanced data management techniques such as serialization and deserialization for efficient storage and retrieval of patient records, healthcare providers can streamline clinical workflows and improve patient care outcomes. Case studies showcase how Java frameworks like Spring and Apache Hadoop are utilized to build scalable and interoperable healthcare systems, facilitating data integration and analysis for medical research and decision support.

Conclusion

In conclusion, mastering advanced Java programming techniques for efficient data management is pivotal in today’s digital landscape. By optimizing data structures, employing concurrent data access strategies, and leveraging libraries and frameworks, developers can unlock the full potential of their Java applications.

Efficient data management not only enhances application performance but also improves scalability and reliability. By implementing performance optimization strategies such as profiling and benchmarking, developers can identify and address performance bottlenecks, ensuring smooth and responsive user experiences.

Furthermore, techniques like serialization and deserialization, along with data compression, enable efficient storage and transmission of data, reducing resource consumption and improving overall system efficiency.

Real-world applications of these advanced techniques demonstrate their effectiveness in addressing complex data management challenges across various industries, from e-commerce to healthcare. By adopting these techniques, businesses can streamline operations, enhance security, and drive innovation.

Ultimately, by keeping pace with the latest advancements and best practices in Java programming, developers can stay ahead in the competitive landscape and deliver exceptional software solutions that meet the evolving needs of users and businesses alike. Happy Coding and Best of Luck!